I have configured Promtail as a daemon in an ECS service and want to push logs to a Loki server. My service is running, but it gives the error below. I have created the promtail directory, with full root permissions, on the EC2 instance where my service is running. Can anyone tell me how to mount it and what permissions are required? In short, how do I resolve this error?

level=error ts=2023-08-11T06:49:47.893922014Z caller=positions.go:179 msg="error writing positions file" error="open /promtail/.positions.yaml8810490207583563416: no such file or directory"

Please share your ECS task definition and promtail configuration.

promtail-dev-revision1.json (1.6 KB)

###promtail-config.yml file###

server:
  http_listen_address: 0.0.0.0
  http_listen_port: 9080

positions:
  filename: /mnt/config/positions.yaml

clients:

scrape_configs:
  - job_name: containers
    static_configs:
      - targets:
          - grafana
        labels:
          job: containerlogs
          __path__: /var/lib/docker/containers/*/*log
    pipeline_stages:
      - json:
          expressions:
            stream: stream
            attrs: attrs
            tag: attrs.tag
      - regex:
          expression: (?P<image_name>(?:[^|][^|])).(?P<container_name>(?:[^|][^|])).(?P<image_id>(?:[^|][^|])).(?P<container_id>(?:[^|][^|]))
          source: "tag"
      - labels:
          tag:
          stream:
          image_name:
          container_name:
          image_id:
          container_id:

We want the log driver to push the logs of the ECS services running on my EC2 machine to the Loki server, so that we can query them from there. As a backup, we are also pushing the logs to S3.

Your Promtail configuration looks fine, but your error did not look Promtail-related, which is why I asked for the ECS task definition.

Thanks a lot for your kind help, @tonyswumac. Is there any solution? I had also attached the task definition JSON file, promtail-dev-revision1.json.

How does Promtail, running as a daemon on the EC2 machine, know that it has to capture the logs of all the ECS services running on the same EC2 instance? After that it pushes them to my Loki server (the Loki client URL is given). What should I specify for that in my Promtail configuration?

I was looking for your promtail’s ECS task definition, not the promtail configuration itself.

From looking at your error, it may be that you are not mounting the /promtail directory into your DAEMON container correctly.

Thank you @tonyswumac. I changed the mount point and the error got resolved.

I changed the mount point to:

"mountPoints": [
  {
    "sourceVolume": "promtail",
    "containerPath": "/mnt/promtail"
  }
]

"volumes": [
  {
    "name": "promtail",
    "host": {
      "sourcePath": "/root/promtail"
    }
  }
]

I made the above change and the directory-related error no longer appears.
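The error message suggests that Promtail writes a temporary file next to the positions file, so the directory holding that file has to exist inside the container. The following is a minimal sketch of that check, not part of the original task definition: `positions_dir_is_mounted` is a hypothetical helper, and the two positions paths passed to it are illustrative values rather than the poster's actual settings.

```python
import posixpath


def positions_dir_is_mounted(positions_file, mount_points):
    """Return True if the directory holding positions_file lies under a containerPath."""
    directory = posixpath.dirname(posixpath.normpath(positions_file))
    for mount in mount_points:
        container_path = posixpath.normpath(mount["containerPath"])
        prefix = container_path.rstrip("/") + "/"
        if directory == container_path or directory.startswith(prefix):
            return True
    return False


# mountPoints as posted above; the positions paths below are illustrative.
mount_points = [{"sourceVolume": "promtail", "containerPath": "/mnt/promtail"}]

print(positions_dir_is_mounted("/mnt/promtail/positions.yaml", mount_points))
print(positions_dir_is_mounted("/promtail/positions.yaml", mount_points))
```

A directory that is baked into the image would also satisfy Promtail, but a host mount is what lets the positions file survive a container restart.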

How does Promtail, running as a daemon on the EC2 machine, know that it has to capture the logs of all the ECS services running on the same EC2 instance? After that it pushes them to my Loki server (the Loki client URL is given). What should I specify for that in my Promtail configuration?

Hi @tonyswumac,

I have tried the following Promtail config to scrape the ECS logs, but I am not getting proper labels in Grafana. I want labels like container-id, container-name, task-definition and service-name. My Promtail configuration file is below. Kindly help me with scrape_configs that produce all of the mentioned labels for an ECS service, so that I can finish my task. The other issues have been resolved. Thank you, @tonyswumac, for helping with this.

server:
  http_listen_address: 0.0.0.0
  http_listen_port: 9080

positions:
  filename: /mnt/positions.yaml
  sync_period: 10s
  ignore_invalid_yaml: false

clients:

scrape_configs:

Check your actual docker logs and see what the attrs field looks like. Make sure it has the data you are actually looking for, and your regex actually works (your regex doesn’t look right, actually).
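One way to act on this is to run the expression against a sample `tag` value before putting it in the pipeline. The sketch below does that in Python, whose `re` module accepts the same `(?P<name>...)` group syntax as the RE2 engine Promtail uses. Both the sample tag and the `candidate` pattern are assumptions: the tag presumes a Docker log option of the form `tag="{{.ImageName}}|{{.Name}}|{{.ImageFullID}}|{{.FullID}}"`, which the thread does not confirm.

```python
import re

# Hypothetical tag value, assuming the Docker log option
# tag="{{.ImageName}}|{{.Name}}|{{.ImageFullID}}|{{.FullID}}".
sample_tag = "nginx:latest|web|sha256:abc|0123abcd"

# The expression exactly as posted in the thread.
posted = re.compile(
    r"(?P<image_name>(?:[^|][^|])).(?P<container_name>(?:[^|][^|]))"
    r".(?P<image_id>(?:[^|][^|])).(?P<container_id>(?:[^|][^|]))"
)

# One possible alternative (an assumption, not the poster's final config):
# capture everything between literal pipe separators.
candidate = re.compile(
    r"(?P<image_name>[^|]+)\|(?P<container_name>[^|]+)"
    r"\|(?P<image_id>[^|]+)\|(?P<container_id>[^|]+)"
)


def extract(pattern, tag):
    """Return the named groups captured from tag, or None if there is no match."""
    match = pattern.search(tag)
    return match.groupdict() if match else None


print(extract(posted, sample_tag))
print(extract(candidate, sample_tag))
```

With this sample, the posted expression still matches but each group captures only two characters, whereas the candidate captures the full pipe-separated fields. The real `attrs.tag` value from the Docker JSON log file should be substituted for `sample_tag`.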

Hi @tonyswumac, we sorted out the ECS metadata part. The only thing still required is the private IP of our EC2 servers alongside the logs, so we need to add EC2 metadata to the scrape configs as well. Is there any way to combine the Docker SD config and the EC2 SD config in a single Promtail? We tried many possibilities, but we were not able to get the logs in Grafana that way. Apart from that, we are getting the logs of our ECS services in Grafana with the desired filters.

Thanks for the help again.

You can use the external_labels in promtail to set default labels. This is our standard set of labels for all promtail agents:

clients:
  - url: <LOKI_ENDPOINT>
    external_labels:
      aws_account_alias: {{ aws_account_alias }}
      aws_account_id: {{ aws_account_id }}
      aws_ecs_cluster_name: {{ aws_ecs_cluster_name }}
      aws_ec2_instance_id: {{ ansible_ec2_instance_id }}
      aws_region: {{ aws_region }}

And you can hardcode the values through userdata / bootstrap script or whatever solution you guys are using for configuration management (we use Ansible, which you might’ve guessed from the {{ }} notation).

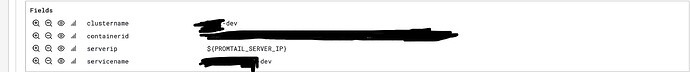

Thanks @tonyswumac, I will pass an environment variable to grab the IP of the EC2 instance. Can you tell me how we can get the private IP of the instance on which ECS is running, along with Docker fields like clustername, containerid and servicename? We are already getting clustername, containerid and servicename as fields in Grafana, but we want the private IP as well. These three fields are available because we added them in relabel_configs from the Docker SD metadata.

You should be able to get that from EC2 metadata.

We are adding it, but it does not give the private IP as output. My config file is below. We have tried many different kinds of metadata, including EC2 metadata. We simply need the private IP of the EC2 instance on which our ECS is running; the rest of the data is available in Grafana. A screenshot is attached. We got clustername, containerid and servicename (from the ECS metadata), but for the server IP I am getting the environment variable itself, not the actual IP of the EC2 instance.

server:
  http_listen_address: 0.0.0.0
  http_listen_port: 9080

positions:
  filename: /root/promtail/positions.yaml
  sync_period: 10s
  ignore_invalid_yaml: false

clients:
  - url: This site is not available in your country
    external_labels:
      serverip: ${PROMTAIL_SERVER_IP}

scrape_configs:
  - job_name: docker_scrape
    docker_sd_configs:
      - host: unix:///var/run/docker.sock
        refresh_interval: 5s
    relabel_configs:
      - source_labels: ['__meta_docker_container_label_com_amazonaws_ecs_container_name']
        target_label: 'servicename'
      - source_labels: ['__meta_docker_container_id']
        target_label: 'containerid'
      - source_labels: ['__meta_docker_container_label_com_amazonaws_ecs_cluster']
        target_label: 'clustername'

As previously mentioned, you can get the private IP from EC2 metadata. You then need to inject the value into your configuration file when you place the configuration file, however you are doing it currently.
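As a rough sketch of that advice, the script below reads the private IP from the EC2 instance metadata service (IMDSv2) and substitutes it for the `${PROMTAIL_SERVER_IP}` placeholder used in the config above. The function names and the idea of running this on the host at bootstrap time are assumptions, not the setup either poster described; `fetch_private_ip` only works on an EC2 instance. Promtail can also expand `${VAR}` references itself when started with `-config.expand-env=true`, and a literal `${PROMTAIL_SERVER_IP}` appearing as the label value may mean that flag was not set, though the thread does not confirm this.

```python
import urllib.request

IMDS = "http://169.254.169.254/latest"


def fetch_private_ip(timeout=2.0):
    """Fetch the instance's private IPv4 address via IMDSv2 (EC2 only)."""
    # Step 1: request a short-lived session token.
    token_request = urllib.request.Request(
        IMDS + "/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
    )
    with urllib.request.urlopen(token_request, timeout=timeout) as response:
        token = response.read().decode()

    # Step 2: use the token to read the local-ipv4 metadata entry.
    ip_request = urllib.request.Request(
        IMDS + "/meta-data/local-ipv4",
        headers={"X-aws-ec2-metadata-token": token},
    )
    with urllib.request.urlopen(ip_request, timeout=timeout) as response:
        return response.read().decode().strip()


def inject_server_ip(config_text, private_ip):
    """Replace the ${PROMTAIL_SERVER_IP} placeholder with the actual address."""
    return config_text.replace("${PROMTAIL_SERVER_IP}", private_ip)


# Off-instance demonstration with a made-up address; on the EC2 host,
# pass fetch_private_ip() instead of the literal string.
template = "external_labels:\n  serverip: ${PROMTAIL_SERVER_IP}\n"
print(inject_server_ip(template, "10.0.1.23"))
```

Writing the rendered text to the path Promtail reads its config from would be the remaining step, and depends on how the file is placed today.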

Thanks @tonyswumac. If the Promtail daemon stops sending logs, is there any retry mechanism for that case? Also, why is live log tailing not working in Grafana, as far as Promtail is concerned? We are now getting clustername, containerid, servicename and the IP address.

Thanks again for the help.

Hi @tonyswumac ,

We want to automate the configuration for setting up Promtail in our production environment on AWS. Do you have a repository we can use as a reference for doing this with Ansible? If you have Ansible playbook details or any related reference, please share them with me. We want to push configuration changes from GitLab to an Ansible server running on an EC2 machine, which will then apply the changes to all connected hosts, or have the hosts detect any changes and update their configuration/application accordingly.

Here is an example of how you can use SSM + Ansible to place configuration files on your ECS hosts: GitHub - tonyswu/terraform-ssm-ansible-example

This is a bit outside the scope of this forum, but please feel free to ping me offline through private message if you'd like.

Hi @tonyswumac ,

We are running a Loki server on an EC2 instance with 8 CPUs and 16 GB of RAM, but the server is not running properly. Loki is installed as a service on the instance, and we are pushing the logs of 2 clusters to Loki and backing them up to an S3 bucket using Promtail. In Grafana we sometimes get an error like "failed to call the resource" even though the Loki server appears to be running fine.

Every time, we have to stop the Loki instance and start it again, but this is not an ideal solution; we want a stable setup for Loki.

Can you please help me in this regard?