@yosiasz Thank you for trying to help.

Here is a sample Splunk SPL search that I am running:

```
index="servicenow_pub" sourcetype="snow:incident" source="https://now.placeholder.com/" dv_opened_by="SA SPLUNK" dv_assignment_group="*"
| stats
    earliest(_time) as _time,
    earliest(dv_state) as dv_state_earliest,
    earliest(dv_assignment_group) as dv_assignment_group,
    earliest(dv_sys_mod_count) as dv_sys_mod_count by dv_number
| search dv_sys_mod_count<3 dv_state_earliest="New"
| timechart count(dv_number) span=15m as Incident_Count by dv_assignment_group limit=5 partial=false
| head 10
```

The search returns the `Incident_Count` series for the top 5 assignment groups (`limit=5`), and all remaining groups are aggregated into `OTHER`. The final `head 10` discards all but the first 10 rows (just for this example).

Because the search returns the top 5 assignment groups, the resulting column names are dynamic and depend on the data. Only the number of columns is fixed (5 groups + `OTHER`, plus `_time` and `_span`).
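To illustrate why the column names move around, here is a rough Python sketch of what `limit=5` does to the columns. It is my own approximation, not Splunk's implementation: `top_n_with_other` is a made-up name, and I am assuming groups are ranked by their total count over the whole time range.

```python
from collections import Counter, defaultdict


def top_n_with_other(events, n=5):
    """Pivot (time_bucket, group) events into one column per group.

    Only the n groups with the highest total count get their own column;
    all other groups are folded into OTHER. Assumption: ranking is by
    total count over the whole range (my reading of timechart's limit).
    """
    totals = Counter(group for _, group in events)
    top = [group for group, _ in totals.most_common(n)]
    columns = ["_time"] + top + (["OTHER"] if len(totals) > n else [])

    # One row per time bucket, every value column starting at 0.
    table = defaultdict(lambda: dict.fromkeys(columns[1:], 0))
    for bucket, group in events:
        table[bucket][group if group in top else "OTHER"] += 1

    rows = [{"_time": bucket, **counts} for bucket, counts in sorted(table.items())]
    return columns, rows


# Two refreshes of the same search: the group names differ, the column count does not.
refresh_1 = [("06:00", "A"), ("06:00", "A"), ("06:00", "B"),
             ("06:15", "A"), ("06:15", "B"), ("06:15", "C")]
refresh_2 = [("06:00", "C"), ("06:00", "C"), ("06:15", "D"),
             ("06:15", "D"), ("06:15", "D"), ("06:15", "A")]
print(top_n_with_other(refresh_1, n=2)[0])  # ['_time', 'A', 'B', 'OTHER']
print(top_n_with_other(refresh_2, n=2)[0])  # ['_time', 'D', 'C', 'OTHER']
```

The column keys are only known once the data has arrived, which is exactly what breaks any selection by field name further down the pipeline.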

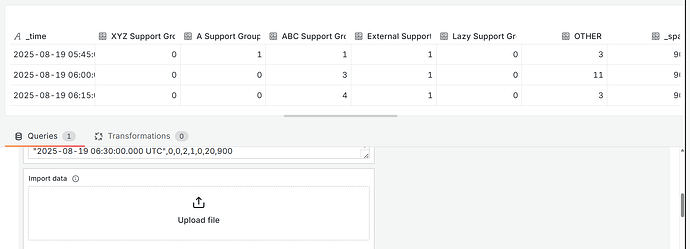

Here is an example of the resulting CSV:

```
"_time","XYZ Support Group","A Support Group","ABC Support Group","External Support Group ABC","Lazy Support Group",OTHER,"_span"
"2025-08-19 05:45:00.000 UTC",0,1,1,1,0,3,900
"2025-08-19 06:00:00.000 UTC",0,0,3,1,0,11,900
"2025-08-19 06:15:00.000 UTC",0,0,4,1,0,3,900
"2025-08-19 06:30:00.000 UTC",0,0,2,1,0,20,900
"2025-08-19 06:45:00.000 UTC",0,0,2,1,0,8,900
"2025-08-19 07:00:00.000 UTC",1,0,0,1,0,12,900
"2025-08-19 07:15:00.000 UTC",0,0,0,70,0,3,900
"2025-08-19 07:30:00.000 UTC",1,1,0,3,0,4,900
"2025-08-19 07:45:00.000 UTC",0,1,0,2,0,14,900
"2025-08-19 08:00:00.000 UTC",0,0,0,2,0,2,900
```

And this is how I am using it all together with the Infinity plugin.

… and this is the problem with the "Convert field type" transformation: I have to select, by name, each field I need to convert. But, as explained above, the names may change with every refresh.

I would expect either a wildcard selector, a regex selector, or a toggle that converts all fields to numbers by default, so that I only need to convert `_time` to Time afterward.
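To make the request concrete, here is a small Python sketch of the behaviour I am asking for, applied to two rows of the sample CSV above. It is only an illustration, not Grafana or Infinity code: `convert_all_but_time` and `to_number` are hypothetical names, empty cells becoming `None` is my own choice, and the `_time` parsing assumes the `YYYY-MM-DD HH:MM:SS.fff UTC` format from the sample.

```python
import csv
import io
from datetime import datetime, timezone


def to_number(value):
    """Convert a CSV cell to int if possible, else float; empty cells become None."""
    if value == "":
        return None
    try:
        return int(value)
    except ValueError:
        return float(value)


def convert_all_but_time(csv_text, time_field="_time"):
    """Hypothetical 'convert all to number' toggle.

    Every column except time_field becomes numeric, whatever its name,
    so the dynamic group names do not matter. time_field is parsed as a
    UTC datetime (assumes the format used in the sample CSV).
    """
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        row = {}
        for name, value in record.items():
            if name == time_field:
                parsed = datetime.strptime(value.replace(" UTC", ""), "%Y-%m-%d %H:%M:%S.%f")
                row[name] = parsed.replace(tzinfo=timezone.utc)
            else:
                row[name] = to_number(value)
        rows.append(row)
    return rows


SAMPLE_CSV = '''"_time","XYZ Support Group","A Support Group","ABC Support Group","External Support Group ABC","Lazy Support Group",OTHER,"_span"
"2025-08-19 05:45:00.000 UTC",0,1,1,1,0,3,900
"2025-08-19 07:15:00.000 UTC",0,0,0,70,0,3,900
'''

rows = convert_all_but_time(SAMPLE_CSV)
```

The only field that has to be named is `_time`, which is stable across refreshes; everything else is handled regardless of which assignment groups end up in the top 5.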