Hi all,

Very new to Grafana, but already enjoying its advantages over Kibana!

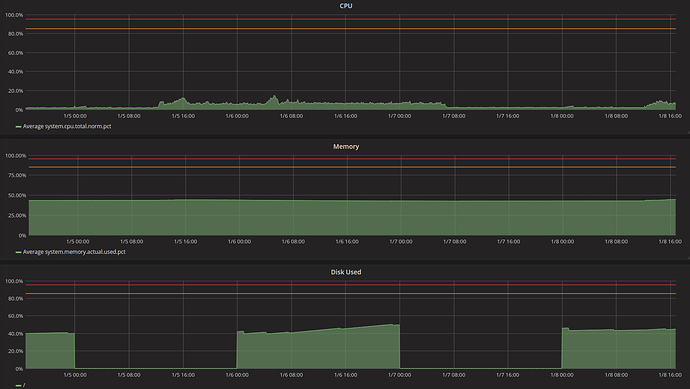

Yesterday I created a table that shows disk usage on the ext4 file systems of a host, selected via a $host variable. This morning, the file system usage stats are 0% for every machine in my cluster. From exactly midnight onwards, Elasticsearch returns values of 0, yet a similar query in Kibana shows the values I would expect.
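The bucket keys in the query inspector response below are epoch milliseconds. A minimal Python sketch (standard library only) that converts them to UTC confirms that the last non-zero bucket is 23:59 on 20 December and the first zero bucket starts exactly at 00:00 UTC on 21 December:

```python
from datetime import datetime, timezone


def to_utc(epoch_ms: int) -> str:
    """Convert an Elasticsearch epoch_millis value to an ISO-8601 UTC string."""
    return datetime.fromtimestamp(epoch_ms / 1000, tz=timezone.utc).isoformat()


# Bucket keys copied from the query inspector response.
for key in (1513814340000, 1513814400000):
    print(key, to_utc(key))
```

This prints `2017-12-20T23:59:00+00:00` for the last good bucket and `2017-12-21T00:00:00+00:00` for the first zero bucket.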

I had suspicions about the index patterns (indexes are daily), but they seem to line up in the JSON. To make matters even stranger, the CPU information that is coming from the same indexes is unaffected!
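The request below searches both `metricbeat-2017.12.20` and `metricbeat-2017.12.21`. Assuming the daily indexes are named by UTC date as `metricbeat-YYYY.MM.DD` (an assumption based on those names), this small Python helper shows which index each point in the query range falls into. The zero bucket is the first one served by the new day's index:

```python
from datetime import datetime, timezone


def daily_index(epoch_ms: int, prefix: str = "metricbeat-") -> str:
    """Name of the daily index a document with this timestamp would land in.

    Assumes indexes are named by UTC date as prefix + YYYY.MM.DD.
    """
    day = datetime.fromtimestamp(epoch_ms / 1000, tz=timezone.utc)
    return prefix + day.strftime("%Y.%m.%d")


# Range bounds from the request, plus the two bucket keys from the response.
for ms in (1513814310000, 1513814340000, 1513814400000, 1513814430000):
    print(ms, daily_index(ms))
```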

Any ideas?

Thanks,

Chris.

Tech stuff…

Grafana v4.6.3 (commit: 7a06a47) running on Centos 7.3

Elastic:

    {
      "name" : "v2qbguT",
      "cluster_name" : "stgmonitoring",
      "cluster_uuid" : "FY1onRioS26mFsqBSrp4wg",
      "version" : {
        "number" : "6.1.0",
        "build_hash" : "c0c1ba0",
        "build_date" : "2017-12-12T12:32:54.550Z",
        "build_snapshot" : false,
        "lucene_version" : "7.1.0",
        "minimum_wire_compatibility_version" : "5.6.0",
        "minimum_index_compatibility_version" : "5.0.0"
      },
      "tagline" : "You Know, for Search"
    }

JSON request and response, as shown by the query inspector, with the expected value just before midnight and a drop to exactly 0 afterwards:

    {
      "xhrStatus": "complete",
      "request": {
        "method": "POST",
        "url": "api/datasources/proxy/1/_msearch",
        "data": "{\"search_type\":\"query_then_fetch\",\"ignore_unavailable\":true,\"index\":[\"metricbeat-2017.12.20\",\"metricbeat-2017.12.21\"]}\n{\"size\":0,\"query\":{\"bool\":{\"filter\":[{\"range\":{\"@timestamp\":{\"gte\":\"1513814310000\",\"lte\":\"1513814430000\",\"format\":\"epoch_millis\"}}},{\"query_string\":{\"analyze_wildcard\":true,\"query\":\"host: stgkafka01 AND system.filesystem.type: ext4\"}}]}},\"aggs\":{\"3\":{\"terms\":{\"field\":\"system.filesystem.mount_point.keyword\",\"size\":10,\"order\":{\"_term\":\"desc\"},\"min_doc_count\":1},\"aggs\":{\"2\":{\"date_histogram\":{\"interval\":\"1m\",\"field\":\"@timestamp\",\"min_doc_count\":1,\"extended_bounds\":{\"min\":\"1513814310000\",\"max\":\"1513814430000\"},\"format\":\"epoch_millis\"},\"aggs\":{\"1\":{\"avg\":{\"field\":\"system.filesystem.used.pct\"}}}}}}}}\n"
      },
      "response": {
        "responses": [
          {
            "took": 11,
            "timed_out": false,
            "_shards": {
              "total": 10,
              "successful": 10,
              "skipped": 0,
              "failed": 0
            },
            "hits": {
              "total": 4,
              "max_score": 0,
              "hits": []
            },
            "aggregations": {
              "3": {
                "doc_count_error_upper_bound": 0,
                "sum_other_doc_count": 0,
                "buckets": [
                  {
                    "2": {
                      "buckets": [
                        {
                          "1": {
                            "value": 0.020999999716877937
                          },
                          "key_as_string": "1513814340000",
                          "key": 1513814340000,
                          "doc_count": 1
                        },
                        {
                          "1": {
                            "value": 0
                          },
                          "key_as_string": "1513814400000",
                          "key": 1513814400000,
                          "doc_count": 1
                        }
                      ]
                    },
                    "key": "/data",
                    "doc_count": 2
                  },
                  {
                    "2": {
                      "buckets": [
                        {
                          "1": {
                            "value": 0.09290000051259995
                          },
                          "key_as_string": "1513814340000",
                          "key": 1513814340000,
                          "doc_count": 1
                        },
                        {
                          "1": {
                            "value": 0
                          },
                          "key_as_string": "1513814400000",
                          "key": 1513814400000,
                          "doc_count": 1
                        }
                      ]
                    },
                    "key": "/",
                    "doc_count": 2
                  }
                ]
              }
            },
            "status": 200
          }
        ]
      }
    }
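To see at a glance which mount points and buckets come back as zero, a small Python sketch can flatten the nested aggregations into rows. The aggregation IDs ("3" terms on mount point, "2" date_histogram, "1" avg) are the ones Grafana generated in the request above; the dict is a hand-trimmed copy of the response, not a live query:

```python
from __future__ import annotations


def flatten(msearch_response: dict) -> list[tuple[str, int, float | None]]:
    """Turn terms -> date_histogram -> avg aggregations into (mount, ts, avg) rows.

    Aggregation IDs "3", "2" and "1" match the Grafana request above.
    An avg value can be None if a bucket has no documents with the field.
    """
    rows = []
    for resp in msearch_response["responses"]:
        for mount in resp["aggregations"]["3"]["buckets"]:
            for bucket in mount["2"]["buckets"]:
                rows.append((mount["key"], bucket["key"], bucket["1"]["value"]))
    return rows


# Trimmed copy of the response above (only the fields used here).
response = {
    "responses": [{
        "aggregations": {"3": {"buckets": [
            {"key": "/data", "2": {"buckets": [
                {"key": 1513814340000, "1": {"value": 0.020999999716877937}},
                {"key": 1513814400000, "1": {"value": 0}},
            ]}},
            {"key": "/", "2": {"buckets": [
                {"key": 1513814340000, "1": {"value": 0.09290000051259995}},
                {"key": 1513814400000, "1": {"value": 0}},
            ]}},
        ]}}
    }]
}

for mount_point, ts, avg in flatten(response):
    print(f"{mount_point:6} {ts} {avg}")
```

Both mount points flip to 0 in the same 00:00 bucket, each still backed by one document (`doc_count: 1`), so the bucket is not empty; the average itself is 0.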

I can even curl a document straight out of ES and see a non-zero value, which should give a non-zero result when averaged:

    {
      "_id": "NwVqdmABrbvf57qXtT9e",
      "_index": "metricbeat-2017.12.21",
      "_score": 6.9077773,
      "_source": {
        "@timestamp": "2017-12-21T00:13:11.910Z",
        "@version": "1",
        "beat": {
          "hostname": "stgkafka01",
          "name": "stgkafka01",
          "version": "6.1.0"
        },
        "fields": {
          "env": "staging"
        },
        "host": "stgkafka01",
        "index-group": "metricbeat",
        "metricset": {
          "module": "system",
          "name": "filesystem",
          "rtt": 1015
        },
        "system": {
          "filesystem": {
            "available": 42005876736,
            "device_name": "/dev/nbd0",
            "files": 3055616,
            "free": 44522651648,
            "free_files": 3020359,
            "mount_point": "/",
            "total": 49080274944,
            "type": "ext4",
            "used": {
              "bytes": 4557623296,
              "pct": 0.0929
            }
          }
        }
      },
      "tags": [
        "u'Kafka'",
        "beats_input_raw_event"
      ]
    },
    "_type": "doc"
    }
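Since this field averages to a non-zero value in the 23:59 bucket but to 0 from the 00:00 bucket onwards (served from `metricbeat-2017.12.21`, the index this document lives in), one thing worth comparing is how `system.filesystem.used.pct` is mapped in each daily index. Elasticsearch's get-field-mapping API returns this (`GET metricbeat-*/_mapping/field/system.filesystem.used.pct`). The Python sketch below only parses such a response. It assumes the ES 6.x response shape, where mappings are nested under a type name (`doc`, matching `_type` above). The sample dict is hypothetical, made up to show what a type mismatch between days would look like; it is not output from this cluster:

```python
from __future__ import annotations


def field_types(mapping_response: dict, field: str) -> dict[str, str]:
    """Map each index name to the type of `field` in that index.

    Assumes the ES 6.x get-field-mapping response shape:
    {index: {"mappings": {type_name: {field: {"mapping": {leaf: {"type": ...}}}}}}}
    """
    leaf = field.rsplit(".", 1)[-1]  # the "mapping" object is keyed by the last path segment
    types = {}
    for index, body in mapping_response.items():
        for type_body in body["mappings"].values():
            if field in type_body:
                types[index] = type_body[field]["mapping"][leaf]["type"]
    return types


FIELD = "system.filesystem.used.pct"

# HYPOTHETICAL sample for illustration only, NOT from this cluster.
sample = {
    "metricbeat-2017.12.20": {"mappings": {"doc": {FIELD: {
        "full_name": FIELD, "mapping": {"pct": {"type": "float"}}}}}},
    "metricbeat-2017.12.21": {"mappings": {"doc": {FIELD: {
        "full_name": FIELD, "mapping": {"pct": {"type": "long"}}}}}},
}

types = field_types(sample, FIELD)
print(types)
if len(set(types.values())) > 1:
    print("Field type differs between daily indexes")
```

Running the real query against both indexes and feeding the result to `field_types` would show whether the mappings actually match.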