Hi

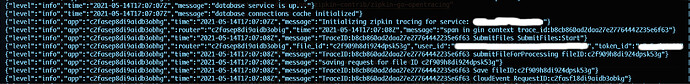

I've been working with Tempo for a few weeks now, trying to get a solid POC. I can create my own spans with a Zipkin tracer (e.g. `opentracing.StartSpan("myspan")`) and retrieve them in the Grafana UI, and that works. Our system is written in Go and uses the Gin (gin-gonic) framework, which has a middleware option for tracing:

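```go
package main

import (
	"github.com/gin-gonic/gin"
	"github.com/opentracing-contrib/go-gin/ginhttp"
)

func main() {
	tracer := CreateMyZipkinTracer() // my own helper; returns a Zipkin-backed opentracing.Tracer

	r := gin.New()
	r.Use(ginhttp.Middleware(tracer)) // starts a span for each incoming request
	r.Run()                           // start serving requests
}
```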

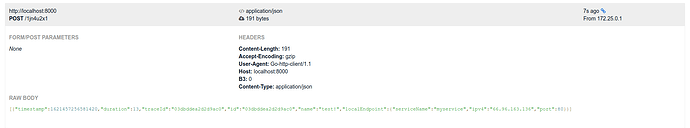

So I decided to use it: I passed my tracer to the middleware and sent a POST/GET request. When I extracted the TraceID from the span created by the Gin middleware (the same way I do for my own spans), I noticed it was 32 hex digits long rather than the usual 16 (e.g. `0ba9b72392e0f36a`), so I couldn't find that trace in the UI.

In fact, the TraceID is defined as a 128-bit number, stored internally as two `uint64` values (high and low).

I have tried searching with the low part, the high part, and the full ID, but Tempo doesn't find the trace in the UI.
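The three search forms described above can be derived from a 32-digit ID as in this minimal stdlib-only sketch. `searchForms` and `padTo128` are hypothetical helpers, and the ID in `main` is an arbitrary example, not one from this setup. `padTo128` illustrates that a 16-digit ID left-padded with zeros is numerically the same 128-bit value with a zero high half; whether Tempo's search normalizes IDs this way is an assumption this sketch does not verify.

```go
package main

import (
	"encoding/hex"
	"fmt"
	"strings"
)

// searchForms (hypothetical helper) splits a 32-digit hex trace ID into the
// forms tried above: the full ID, the high 64 bits, and the low 64 bits.
func searchForms(id string) (full, high, low string, err error) {
	id = strings.ToLower(strings.TrimSpace(id))
	if len(id) != 32 {
		return "", "", "", fmt.Errorf("expected 32 hex digits, got %d", len(id))
	}
	if _, err := hex.DecodeString(id); err != nil {
		return "", "", "", fmt.Errorf("not a hex trace ID: %w", err)
	}
	// The first 16 digits are the high half, the last 16 the low half.
	return id, id[:16], id[16:], nil
}

// padTo128 (hypothetical helper) left-pads a shorter hex ID with zeros to 32
// digits, i.e. the 128-bit value whose high half is zero.
func padTo128(id string) string {
	if len(id) >= 32 {
		return id
	}
	return strings.Repeat("0", 32-len(id)) + id
}

func main() {
	// Arbitrary example ID, not taken from this setup.
	full, high, low, err := searchForms("4bf92f3577b34da6a3ce929d0e0e4736")
	if err != nil {
		panic(err)
	}
	fmt.Println("full:", full)
	fmt.Println("high:", high)
	fmt.Println("low: ", low)
	fmt.Println("64-bit ID padded:", padTo128("0ba9b72392e0f36a"))
}
```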

Why is Tempo not able to process these spans? Is there a configuration change needed for this?

Thanks.