What Grafana version and what operating system are you using?

I’m running Grafana Mimir 2.11.0 on Linux, as a Docker container.

What are you trying to achieve?

I’m experimenting with Mimir and trying to ingest data from Prometheus and display it in a Grafana dashboard.

How are you trying to achieve it?

I’m running Mimir through the following Docker Compose file:

    version: "3.4"
    services:
      mimir:
        image: grafana/mimir:latest
        command: ["-config.file=/etc/mimir.yaml"]
        container_name: mimir
        hostname: mimir
        volumes:
          - /container/mimir/config/mimir.yaml:/etc/mimir.yaml
          - /container/mimir/config/alertmanager-fallback-config.yaml:/etc/alertmanager-fallback-config.yaml
        ports:
          - "9009:9009"
          - "8080:8080"
        restart: always

The file mimir.yaml contains this:

    # Run Mimir in single process mode, with all components running in 1 process.
    target: all,alertmanager,overrides-exporter

    # Configure Mimir to use Minio as object storage backend.
    common:
      storage:
        backend: s3
        s3:
          endpoint: minio.mydomain
          access_key_id: {{ mimir_s3_access_key_id }}
          secret_access_key: {{ mimir_s3_secret_access_key }}
          bucket_name: mimir

    # Blocks storage requires a prefix when using a common object storage bucket.
    blocks_storage:
      storage_prefix: blocks
      tsdb:
        dir: /data/ingester

    # Use memberlist, a gossip-based protocol, to enable the 3 Mimir replicas to communicate
    memberlist:
      join_members: [mimir]

    ingester:
      ring:
        replication_factor: 1

    ruler:
      rule_path: /data/ruler
      alertmanager_url: http://127.0.0.1:8080/alertmanager
      ring:
        # Quickly detect unhealthy rulers to speed up the tutorial.
        heartbeat_period: 2s
        heartbeat_timeout: 10s

    alertmanager:
      data_dir: /data/alertmanager
      fallback_config_file: /etc/alertmanager-fallback-config.yaml
      external_url: http://localhost:9009/alertmanager

    server:
      log_level: warn

And alertmanager-fallback-config.yaml contains the standard sample content:

    route:
      group_wait: 0s
      receiver: empty-receiver

    receivers:
      # In this example we're not going to send any notification out of Alertmanager.
      - name: "empty-receiver"

Prometheus is configured to ingest into Mimir:

    remote_write:
      - url: http://host.mydomain:8080/api/v1/push
        headers:
          X-Scope-OrgID: test-tenant

What happened?

Ingestion from Prometheus apparently works: I see data in Minio (it has been running for 3 days now), and the page ingester/tsdb/test-tenant shows Number of series: 10678. However, when I fetch the values of the __name__ label through curl -s --header "X-Scope-OrgID: test" http://host.mydomain:8080/prometheus/api/v1/label/__name__/values, I get only this:

{"status":"success","data":[]}
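
The same check can be scripted so that it is easy to repeat for more than one tenant ID. Mimir isolates data per tenant, so a query only returns series that were written under the same X-Scope-OrgID value. The remote_write section above sends test-tenant, while the curl call above sends test, so comparing both may be informative. This is a minimal sketch, assuming the base URL shown above. The function names label_values_url and query_label_values are hypothetical helpers made up for illustration; they are not part of Mimir or Grafana.

```shell
#!/bin/sh
# Hypothetical helper: print the label-values URL for a Mimir base URL.
#   $1 = base URL (assumed here: http://host.mydomain:8080)
#   $2 = label name (for example __name__)
label_values_url() {
  printf '%s/prometheus/api/v1/label/%s/values\n' "$1" "$2"
}

# Hypothetical helper: query the values of one label for one tenant.
#   $1 = base URL, $2 = tenant ID sent as X-Scope-OrgID, $3 = label name
# Sends an HTTP request, so it needs network access to the Mimir host.
query_label_values() {
  curl -s --header "X-Scope-OrgID: $2" "$(label_values_url "$1" "$3")"
}

# Example (commented out so that sourcing this file sends no requests):
# for tenant in test test-tenant; do
#   echo "tenant: $tenant"
#   query_label_values "http://host.mydomain:8080" "$tenant" "__name__"
#   echo
# done
```

If the two tenant IDs return different results, the empty response is a tenant mismatch rather than an ingestion problem. In that case the Grafana data source would need to send the same X-Scope-OrgID header as Prometheus.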

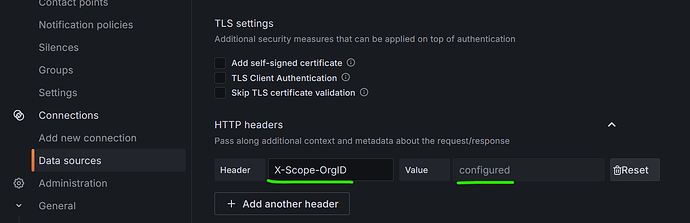

When I explore the data source in Grafana, it shows “(No metrics found)”.

What did you expect to happen?

See the metrics in Grafana and be able to build dashboards.

Did you receive any errors in the Grafana UI or in related logs?

None at all.

Did you follow any online instructions? If so, what is the URL?

Basically the "Get started with Grafana Mimir" page of the Grafana Mimir documentation, but Grafana and Prometheus are spun up separately instead of through the compose file.