Hello,

We are running Loki as a single-binary (monolithic) deployment and recently ran into a problem that really grinds our gears.

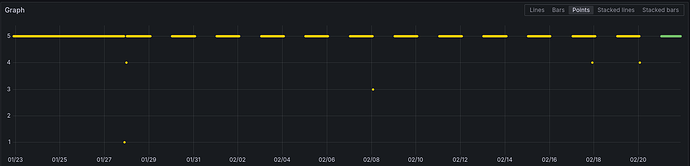

After a while, every even-numbered day became unavailable for querying, while the odd days remained available.
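To pin down exactly which days drop out, it can help to check a per-day metric instead of eyeballing the graph. The following is a minimal sketch that assumes you already have the `data.result` matrix of a `count_over_time` query from Loki's `/loki/api/v1/query_range` endpoint. The function name `days_without_data` and the sample values are hypothetical.

```python
from datetime import date, datetime, timedelta, timezone


def days_without_data(matrix_result, first_day, last_day):
    """Return the UTC dates in [first_day, last_day] that have no non-zero sample.

    matrix_result is the "data" -> "result" list of a Loki query_range response
    for a metric query (e.g. count_over_time); every series carries
    "values": [[unix_seconds, "value"], ...].
    """
    seen = set()
    for series in matrix_result:
        for ts, value in series["values"]:
            if float(value) > 0:
                # Bucket each non-empty sample by its UTC calendar day.
                seen.add(datetime.fromtimestamp(float(ts), tz=timezone.utc).date())

    # Walk every day in the requested window and report the ones never seen.
    missing = []
    day = first_day
    while day <= last_day:
        if day not in seen:
            missing.append(day.isoformat())
        day += timedelta(days=1)
    return missing


# Hypothetical sample: data on 2025-02-19 and 2025-02-21, nothing on 2025-02-20.
sample = [
    {
        "metric": {"nodename": "docker-1"},
        "values": [[1739923200, "12"], [1740096000, "7"]],
    }
]
print(days_without_data(sample, date(2025, 2, 19), date(2025, 2, 21)))
# ['2025-02-20']
```

If you run this against the same range before and after the gaps appear, it produces a list of affected days that you can post alongside the graph.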

Loki runs in the official Docker image with an S3 backend and a fairly simple configuration.

During troubleshooting we also upgraded from 2.9.7 to 3.4.2; the issue persisted on both versions.

We are completely sure that the logs are there, because if we follow these steps, all the logs become available for a short period of time, but then disappear again:

- stop the container
- remove the BoltDB and TSDB active index directories and the cache locations
- start the container

(The /loki directory, which contains the WAL, the cache directories mentioned above and the compactor's directory, is mounted externally, as is the configuration; both persist between containers.)
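The workaround steps above can be scripted so they are repeated exactly each time. This is a sketch only. The container name `loki` and the host directory `/srv/loki` mounted at `/loki` are hypothetical and should be replaced with your own values. The directory names come from `storage_config`. By default the script only prints the commands (dry run).

```python
import subprocess
from pathlib import PurePosixPath

# Hypothetical values: the container name and the host directory that is
# mounted at /loki are assumptions; adjust them to your deployment.
CONTAINER = "loki"
HOST_LOKI_DIR = PurePosixPath("/srv/loki")

# Local index and cache directories from storage_config (relative to /loki).
# The WAL and the compactor working directory are deliberately left alone.
INDEX_DIRS = ["index", "index-cache", "index-tsdb", "index-cache-tsdb"]


def purge_plan(container, host_dir):
    """Build the stop / purge / start command sequence without running it."""
    steps = [["docker", "stop", container]]
    steps += [["rm", "-rf", str(host_dir / name)] for name in INDEX_DIRS]
    steps.append(["docker", "start", container])
    return steps


def run(steps, dry_run=True):
    """Print the commands by default; execute them only when dry_run=False."""
    for cmd in steps:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)  # stop at the first failing step


run(purge_plan(CONTAINER, HOST_LOKI_DIR))
```

The script builds the command list separately from running it, so you can review the exact `rm -rf` targets before passing `dry_run=False`.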

The only error messages logged between the working and the malfunctioning state are:

```
level=error ts=2025-02-21T14:33:31.0367365Z caller=scheduler_processor.go:175 component=querier org_id=fake traceID=4d5f80d1cf51e883 msg="error notifying scheduler about finished query" err=EOF addr=127.0.0.1:9095
level=error ts=2025-02-21T14:33:31.036731034Z caller=scheduler_processor.go:111 component=querier msg="error processing requests from scheduler" err="rpc error: code = Canceled desc = context canceled" addr=127.0.0.1:9095
```

(From what I've read on community.grafana.com, these are possibly unrelated.)

We've tried reducing cache_ttl to 1s and disabling caching wherever possible, but nothing has helped so far.

Any ideas on how to proceed with the troubleshooting would be welcome!

The full configuration:

```yaml
auth_enabled: false

server:
  http_listen_port: 3100
  log_level: error

common:
  path_prefix: /loki
  ring:
    instance_addr: 127.0.0.1
    kvstore:
      store: inmemory
  replication_factor: 1
  storage:
    s3:
      bucketnames: B
      endpoint: A
      region: Z
      access_key_id: Y
      secret_access_key: X

storage_config:
  boltdb_shipper:
    active_index_directory: /loki/index
    cache_location: /loki/index-cache
    cache_ttl: 1s
  tsdb_shipper:
    active_index_directory: /loki/index-tsdb
    cache_location: /loki/index-cache-tsdb
    cache_ttl: 1s

limits_config:
  max_entries_limit_per_query: 50000
  query_timeout: 2m
  allow_structured_metadata: false

compactor:
  working_directory: /loki/compactor

schema_config:
  configs:
    - from: 2022-11-24
      store: boltdb-shipper
      object_store: s3
      schema: v11
      index:
        prefix: index_
        period: 24h
    - from: 2025-02-21
      object_store: s3
      store: tsdb
      schema: v13
      index:
        prefix: index_
        period: 24h

ingester:
  chunk_idle_period: 5m
  chunk_retain_period: 30s
  max_chunk_age: 5h
```
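The two `schema_config` entries divide the timeline. Each entry applies from its `from` date until the next entry begins. With the dates above, days up to 2025-02-20 are indexed through boltdb-shipper (v11), and days from 2025-02-21 onward are indexed through TSDB (v13). This is a small illustrative helper that resolves which entry covers a given day. It is not Loki code, and the name `active_schema` is hypothetical.

```python
from datetime import date

# The two schema_config periods from the configuration above.
SCHEMA_CONFIGS = [
    {"from": date(2022, 11, 24), "store": "boltdb-shipper", "schema": "v11"},
    {"from": date(2025, 2, 21), "store": "tsdb", "schema": "v13"},
]


def active_schema(day, configs=SCHEMA_CONFIGS):
    """Return the period whose `from` is the latest one on or before `day`."""
    active = None
    for cfg in sorted(configs, key=lambda c: c["from"]):
        if cfg["from"] <= day:
            active = cfg  # a later entry supersedes the earlier one
    if active is None:
        raise ValueError(f"no schema period covers {day}")
    return active


for d in (date(2025, 2, 20), date(2025, 2, 21)):
    print(d, active_schema(d)["store"])
# 2025-02-20 boltdb-shipper
# 2025-02-21 tsdb
```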

A canceled context can sometimes be resource-related. How much CPU and memory are you allocating to your single Loki instance?

You say that if you restart the container all logs come back. Does that include the gaps I see in your graph from several days ago? If you run a query (say, count_over_time) before and after the logs disappear, what metrics do you see? If you query specifically for a gap, what results do you get?
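To make the before/after comparison repeatable, you can send the same `count_over_time` query to Loki's `/loki/api/v1/query_range` HTTP endpoint and store the results each time. This is a minimal sketch using only the standard library. It assumes Loki is reachable at `http://localhost:3100`. The helper names are hypothetical. The `start`/`end` values use RFC3339, and `step` uses a duration string.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def query_range_url(base_url, logql, start, end, step):
    """Build a GET URL for Loki's /loki/api/v1/query_range endpoint.

    start/end may be RFC3339 strings or Unix timestamps; step is a duration
    such as "1h". All values are URL-encoded.
    """
    params = {"query": logql, "start": start, "end": end, "step": step}
    return f"{base_url.rstrip('/')}/loki/api/v1/query_range?{urlencode(params)}"


def fetch_matrix(url, timeout=30):
    """Run the query and return the "data" -> "result" list of the response."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)["data"]["result"]


url = query_range_url(
    "http://localhost:3100",  # assumption: Loki reachable on its default port
    'sum(count_over_time({nodename="docker-1"}[1h]))',
    "2025-02-15T00:00:00Z",
    "2025-02-22T00:00:00Z",
    "1h",
)
print(url)
# Call fetch_matrix(url) once now and again after the gaps reappear, then diff.
```

Keeping the query, range and step fixed means any difference between the two snapshots comes from what Loki returns, not from how the query was phrased.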

Not exactly. Simply restarting the container is not enough; the index caches and active index directories (or at least some of them) need to be purged manually between stopping and starting the container.

When I get my logs back, I get all of them back, including the gaps. If I query specifically for a gap while the logs are missing, I receive an empty list.

Interestingly, this logcli call returns 0 results:

```shell
logcli query --from 2025-02-20T15:10:00Z --to 2025-02-20T23:59:59Z --limit 1000 '{nodename="docker-1"}'
```

while this one returns many (1000, given the limit):

```shell
logcli query --from 2025-02-20T15:10:00Z --to 2025-02-21T00:00:00Z --limit 1000 '{nodename="docker-1"}'
```

The second query ends only 1 second after the first one, yet it returns many logs from 2025-02-20.
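With `period: 24h`, each index table covers one UTC day, so the extra second moves the end of the range into the next day's table. This sketch assumes that Loki names periodic tables as the configured prefix followed by the Unix time divided by the period in seconds. Under that assumption, 2025-02-20 maps to `index_20139`. The sketch also treats both ends of the query range as inclusive. The helper `table_names` is hypothetical.

```python
from datetime import datetime, timezone

PERIOD_SECONDS = 24 * 60 * 60  # "period: 24h" from schema_config


def table_names(start, end, prefix="index_", period=PERIOD_SECONDS):
    """Periodic index tables touched by [start, end], both ends inclusive.

    Assumes tables are named prefix + (unix_seconds // period_seconds).
    """
    first = int(start.timestamp()) // period
    last = int(end.timestamp()) // period
    return [f"{prefix}{n}" for n in range(first, last + 1)]


start = datetime(2025, 2, 20, 15, 10, tzinfo=timezone.utc)
print(table_names(start, datetime(2025, 2, 20, 23, 59, 59, tzinfo=timezone.utc)))
# ['index_20139']
print(table_names(start, datetime(2025, 2, 21, tzinfo=timezone.utc)))
# ['index_20139', 'index_20140']
```

Under these assumptions, the empty query reads a single daily table, while the query that returns results touches two.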

Running a `count_over_time({}[5m])` expression returned valid results, and AFTER this query the previously empty query also returned valid logs, but only for a while. After a few minutes (about the time it took to write this reply), count_over_time returned data points with the very same gaps as the normal queries.

The host has 15 GB of RAM (+4 GB swap) and 4 cores, but it shares these resources with other Docker containers. According to my collected metrics, the host's memory usage is usually around 15%, and its CPU usage is around 2.5-5%. The Loki container itself uses at most around 300 MB of memory.

I found that no storage_config would remain in my config after disabling the index caches, so I removed the whole section altogether.

The gaps are filled with data for about 2 minutes. After I pressed the "Run query" button in Grafana a few times, the gaps reappeared, and the

```shell
logcli query --from 2025-02-20T15:10:00Z --to 2025-02-20T23:59:59Z --limit 1000 '{nodename="docker-1"}'
```

query returned 0 results again. (This query also worked during those first 2 minutes.)

Unfortunately, I have no access to S3 logs. We use the S3 service from OVH, which (as far as I know) provides no way to access them. When I once asked for S3 audit logs, their support referred me to their legal team. ¯_(ツ)_/¯

Apparently, removing the cache location (along with the other cache-related options in storage_config) did not disable caching. So I put it back and tried setting cache_ttl to "0s" instead. This does not disable caching either; it only shortens how long cached entries stay valid. What is the right way to disable index caching completely?

(The results remained the same: after a container restart I can see all my logs for about 2 minutes, and then the gaps reappear.)

I am actually not sure. I’ve not had to do this.

I haven't seen this before, and personally I think the problem is most likely your backend storage. How much log data do you have? If your logs aren't very big, I would recommend trying a different storage backend, even if only as a test to see whether anything changes. If you don't have access to S3, MinIO is pretty easy to set up locally for a quick test.

Just to give you an update and some further details:

Now that we have turned our attention to the backend, I have to admit that, besides the S3 migration itself, the new S3 backend is also different: it is a versioned S3 bucket with replication enabled (replication has since been disabled).

I migrated the data back to another S3 bucket that is not versioned, and since then the logs seem to persist and remain available. I don't think Loki has a separate code path for versioned buckets, or that it manipulates S3 object versions directly when they exist, but I cannot rule this out right away. Does Loki have any special logic for versioned S3 buckets?

I don’t think versioning has anything to do with it. We use versioned S3 buckets as well, and I haven’t seen this problem before.

But it sounds like you were at least able to narrow down the problem. I'd double-check the configuration of the S3 bucket that's giving you problems.
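One way to inspect a versioned bucket is to look at its version listing. In a versioning-enabled bucket, a plain DELETE does not remove an object. Instead it adds a delete marker, so normal GETs and listings stop returning the object while older versions remain. The sketch below assumes you can export the bucket's ListObjectVersions output, for example with boto3's `list_object_versions`. Real listings are paginated, so all pages would need to be merged first. The function name `summarize_versions` and the sample keys are hypothetical.

```python
from collections import Counter


def summarize_versions(listing):
    """Inspect a ListObjectVersions-style response from a versioned bucket.

    listing: dict with "Versions" and "DeleteMarkers" lists whose entries carry
    "Key" and "IsLatest" (the shape returned by S3's ListObjectVersions).
    Returns keys currently hidden by a delete marker, and keys stored in more
    than one version (i.e. overwritten at some point).
    """
    hidden = sorted(
        m["Key"] for m in listing.get("DeleteMarkers", []) if m.get("IsLatest")
    )
    counts = Counter(v["Key"] for v in listing.get("Versions", []))
    overwritten = sorted(key for key, n in counts.items() if n > 1)
    return {"hidden": hidden, "overwritten": overwritten}


# Hypothetical listing; real keys and version IDs will differ.
listing = {
    "Versions": [
        {"Key": "index/index_20139/a.gz", "VersionId": "1", "IsLatest": False},
        {"Key": "index/index_20140/b.gz", "VersionId": "2", "IsLatest": True},
        {"Key": "index/index_20140/b.gz", "VersionId": "3", "IsLatest": False},
    ],
    "DeleteMarkers": [
        {"Key": "index/index_20139/a.gz", "VersionId": "4", "IsLatest": True},
    ],
}
print(summarize_versions(listing))
# {'hidden': ['index/index_20139/a.gz'], 'overwritten': ['index/index_20140/b.gz']}
```

If index objects show up under "hidden", the bucket or something acting on it (replication or lifecycle rules, for instance) is making them invisible to Loki.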

After a week of monitoring, I can report that the logs are still available and retrievable. Thanks, Tony, for your support!