I have a Kubernetes cluster where Promtail is deployed as a DaemonSet. Promtail collects the logs from the pods running in the cluster. Here is the Promtail configuration I am using:

    server:
      log_level: info
      log_format: logfmt
      http_listen_port: 3101
    clients:
      - url: http://34.68.174.138:3100/loki/api/v1/push
    positions:
      filename: /run/promtail/positions.yaml
    scrape_configs:
      # See also https://github.com/grafana/loki/blob/master/production/ksonnet/promtail/scrape_config.libsonnet for reference
      - job_name: kubernetes-pods
        pipeline_stages:
          - cri: {}
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - source_labels:
              - __meta_kubernetes_pod_controller_name
            regex: ([0-9a-z-.]+?)(-[0-9a-f]{8,10})?
            action: replace
            target_label: __tmp_controller_name
          - source_labels:
              - __meta_kubernetes_pod_label_app_kubernetes_io_name
              - __meta_kubernetes_pod_label_app
              - __tmp_controller_name
              - __meta_kubernetes_pod_name
            regex: ^;*([^;]+)(;.*)?$
            action: replace
            target_label: app
          - source_labels:
              - __meta_kubernetes_pod_label_app_kubernetes_io_instance
              - __meta_kubernetes_pod_label_instance
            regex: ^;*([^;]+)(;.*)?$
            action: replace
            target_label: instance
          - source_labels:
              - __meta_kubernetes_pod_label_app_kubernetes_io_component
              - __meta_kubernetes_pod_label_component
            regex: ^;*([^;]+)(;.*)?$
            action: replace
            target_label: component
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_node_name
            target_label: node_name
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - action: replace
            replacement: $1
            separator: /
            source_labels:
              - namespace
              - app
            target_label: job
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_container_name
            target_label: container
          - action: replace
            replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_uid
              - __meta_kubernetes_pod_container_name
            target_label: __path__
          - action: replace
            regex: true/(.*)
            replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_annotationpresent_kubernetes_io_config_hash
              - __meta_kubernetes_pod_annotation_kubernetes_io_config_hash
              - __meta_kubernetes_pod_container_name
            target_label: __path__
    limits_config:
    tracing:
      enabled: false
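
As a side note on the relabel rules in this config: the `app`, `instance` and `component` rules all use the regex `^;*([^;]+)(;.*)?$`. The sketch below shows, in Python rather than in Promtail itself, what that pattern does, assuming Prometheus-style relabeling where the source label values are joined with `;` by default and the target is set to the first capture group. The function name and the sample pod values are hypothetical and only for illustration.

```python
import re

# Same pattern as the `app`, `instance` and `component` relabel rules.
FIRST_NON_EMPTY = re.compile(r"^;*([^;]+)(;.*)?$")


def relabel_first_non_empty(values, separator=";"):
    """Join the source label values and return capture group 1.

    Returns None when the regex does not match; with `action: replace`
    the target label is then left unchanged.
    """
    joined = separator.join(values)
    match = FIRST_NON_EMPTY.fullmatch(joined)
    return match.group(1) if match else None


# Hypothetical pod with no app labels: the controller name is used.
print(relabel_first_non_empty(["", "", "checkout", "checkout-7d9f8c6b5d-abcde"]))

# Hypothetical pod where every source label is empty: no match.
print(relabel_first_non_empty(["", "", "", ""]))
```

In other words, each of these rules takes the first non-empty value from its list of source labels, so the `app` label falls back from the pod's app labels to the controller name and finally to the pod name.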

Loki is running on a central server with the following configuration:

    auth_enabled: false
    chunk_store_config:
      max_look_back_period: 0s
    compactor:
      shared_store: filesystem
      working_directory: /data/loki/boltdb-shipper-compactor
    ingester:
      chunk_block_size: 262144
      chunk_idle_period: 3m
      chunk_retain_period: 1m
      lifecycler:
        ring:
          replication_factor: 1
      max_transfer_retries: 0
      wal:
        dir: /data/loki/wal
    limits_config:
      enforce_metric_name: false
      max_entries_limit_per_query: 5000
      reject_old_samples: true
      reject_old_samples_max_age: 168h
    memberlist:
      join_members:
        - 'loki-memberlist'
    schema_config:
      configs:
        - chunks:
            period: 24h
            prefix: chunk_loki_
          index:
            period: 24h
            prefix: index_loki_
          object_store: gcs
          schema: v11
          store: boltdb-shipper
    server:
      grpc_listen_port: 9095
      http_listen_port: 3100
    storage_config:
      boltdb_shipper:
        active_index_directory: /data/loki/index
        cache_location: /data/loki/index_cache
        cache_ttl: 24h
        resync_interval: 5s
        shared_store: gcs
      filesystem:
        directory: /data/loki/chunks
      gcs:
        bucket_name: np-monitoring-infra-loki-us-ct1-gcs
    table_manager:
      retention_deletes_enabled: false

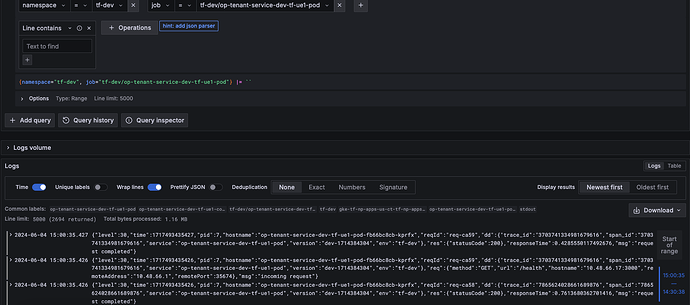

In Grafana, when I query the logs, I get them properly for most services, as shown in the screenshot below:

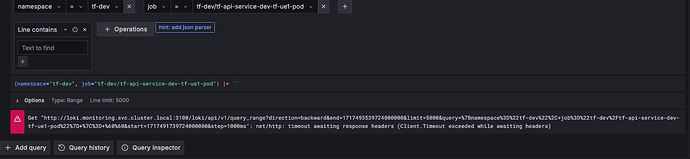

But for one particular service, which generates a large volume of lengthy logs, I get the error below.

How do I resolve this so that I can retrieve the logs properly?