Hi all,

I'm completely flummoxed by what I had always assumed was the simplest query there is:

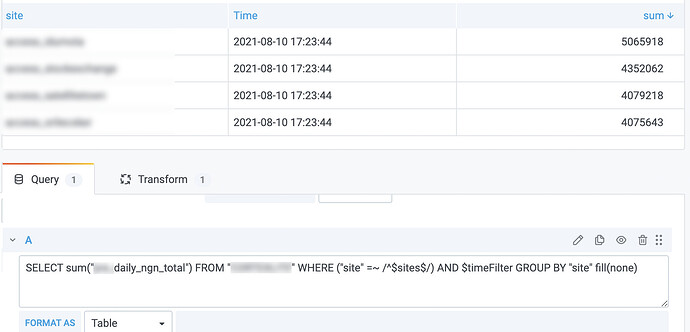

SELECT sum("daily_ngn_total") FROM "MEASUREMENT" WHERE ("site" =~ /^$site$/) AND $timeFilter GROUP BY "site"

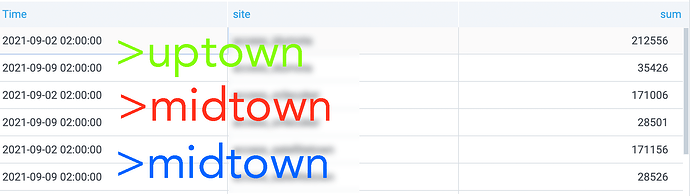

The sum this gives is completely off (too high by a factor of 5), and count("daily_ngn_total") gives me 144 rather than the expected 30 over 30 days. (This metric is calculated and injected once a day, and the query is supposed to add it up over a selected time range.)
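
A quick sanity check on those numbers: 144 / 30 = 4.8, which is close to the factor of 5 in the sum, so it looks as if roughly five points per day are being counted rather than one. As a rough diagnostic sketch (untested, and simply reusing the measurement, field and `$site` variable from the query above), per-day counts and a per-series breakdown might show where the extra points come from:

```sql
-- Points per day (time(1d) buckets are aligned to UTC);
-- a single daily write should give a count of 1 per bucket
SELECT count("daily_ngn_total") FROM "MEASUREMENT" WHERE ("site" =~ /^$site$/) AND $timeFilter GROUP BY time(1d), "site" fill(none)

-- Points per series: GROUP BY * splits on every tag, not just "site"
SELECT count("daily_ngn_total") FROM "MEASUREMENT" WHERE ("site" =~ /^$site$/) AND $timeFilter GROUP BY *
```

If the second query returns more than one series per site, the extra points would be coming from additional tag values. That is only a guess on my part, not something I have confirmed.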

Playing with the fill parameters and the Min interval and Max data points query options doesn’t help.

I tried various math with count("daily_ngn_total") and with $__interval_ms. This works for a few time ranges, but not all.

These folks seem to have had the same issue in "Time(interval) grouping and data scaling" (post #10 by kostyantynartemov), but I doubt any of this works for varying time ranges, and I have to say I’m a bit shocked that this is actually an issue in InfluxDB.

Or am I missing anything?

Also, does anyone know if this is addressed in v2.0?

Thanks!