Hi @Lightlore

Thanks for sharing the script. I built a similar one and used a random function to decide whether the user account is correct or not. See it below:

```javascript
import { group, check } from 'k6';
import http from 'k6/http';
import { Trend, Rate, Counter } from 'k6/metrics';
import { textSummary } from 'https://jslib.k6.io/k6-summary/0.0.2/index.js';
import { randomIntBetween } from 'https://jslib.k6.io/k6-utils/1.2.0/index.js';

// Custom metrics for the ListAccounts call
let trendMyService1ListAccounts = new Trend("api.my.service1-ListAccounts");
let counterMyService1ListAccounts = new Counter('api.my.service1-ListAccounts_count');
let errorRateMyService1ListAccounts = new Rate('api.my.service1-ListAccounts_errors#');

export const options = {
  vus: 10,
  iterations: 10,
  thresholds: {
    'api.my.service1-ListAccounts_errors#': [
      // the threshold fails when more than 10% of the added values are errors;
      // abortOnFail is false here, so the test itself is not aborted
      { threshold: 'rate < 0.1', abortOnFail: false, delayAbortEval: '10s' },
    ],
  },
};

export default function () {
  group("MyService1.ListAccounts", function () {
    let res, result;
    res = http.get('https://httpbin.test.k6.io/anything');
    trendMyService1ListAccounts.add(res.timings.duration);
    counterMyService1ListAccounts.add(1);
    result = check(res, {
      'api.my.service1-ListAccounts response status code was 200': (res) => res.status == 200,
      //'api.my.service1-ListAccounts response content ok (includes testAccount)': (res) => res.body.includes("testAccount")
      'api.my.service1-ListAccounts response content ok (includes testAccount)': randomIntBetween(0, 1), // make it random: 0 (fail) or 1 (pass)
    });
    if (!result) {
      console.log("failed account");
      // true = an error happened in this iteration
      errorRateMyService1ListAccounts.add(true);
    } else {
      console.log("correct account");
      errorRateMyService1ListAccounts.add(false);
    }
  });
}

export function handleSummary(data) {
  return {
    'stdout': textSummary(data, { indent: ' ', enableColors: true }), // Show the text summary to stdout...
    'summary.json': JSON.stringify(data), // and a JSON with all the details...
  };
}
```

I believe you should be able to get both test outputs, the stdout with the coloring, and the json file.

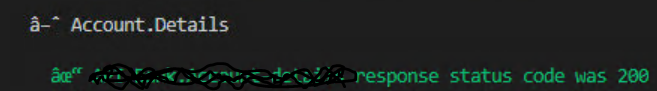

If I run the script above, I get the following:

And this is the summary.json:

```json
{
  "root_group": {
    "checks": [],
    "name": "",
    "path": "",
    "id": "d41d8cd98f00b204e9800998ecf8427e",
    "groups": [
      {
        "name": "MyService1.ListAccounts",
        "path": "::MyService1.ListAccounts",
        "id": "078ec6cf6c764261a9dcbb1eb54a285c",
        "groups": [],
        "checks": [
          {
            "name": "api.my.service1-ListAccounts response status code was 200",
            "path": "::MyService1.ListAccounts::api.my.service1-ListAccounts response status code was 200",
            "id": "df25ea4a97db37eae0cf92d216af9016",
            "passes": 10,
            "fails": 0
          },
          {
            "name": "api.my.service1-ListAccounts response content ok (includes testAccount)",
            "path": "::MyService1.ListAccounts::api.my.service1-ListAccounts response content ok (includes testAccount)",
            "id": "0e5d8efd060f7c195a3f2197ea5ca646",
            "passes": 4,
            "fails": 6
          }
        ]
      }
    ]
  },
  "options": {
    "summaryTrendStats": [
      "avg",
      "min",
      "med",
      "max",
      "p(90)",
      "p(95)"
    ],
    "summaryTimeUnit": "",
    "noColor": false
  },
  "state": {
    "isStdOutTTY": true,
    "isStdErrTTY": true,
    "testRunDurationMs": 462.359
  },
  "metrics": {
    "iteration_duration": {
      "type": "trend",
      "contains": "time",
      "values": {
        "avg": 453.81361239999995,
        "min": 439.7815,
        "med": 455.4631875,
        "max": 461.801916,
        "p(90)": 461.79374129999997,
        "p(95)": 461.79782865
      }
    },
    "api.my.service1-ListAccounts_errors#": {
      "type": "rate",
      "contains": "default",
      "values": {
        "rate": 0.6,
        "passes": 6,
        "fails": 4
      },
      "thresholds": {
        "rate < 0.1": {
          "ok": false
        }
      }
    },
    "http_req_tls_handshaking": {
      "type": "trend",
      "contains": "time",
      "values": {
        "min": 125.353,
        "med": 140.8875,
        "max": 149.595,
        "p(90)": 148.1271,
        "p(95)": 148.86105,
        "avg": 139.59390000000002
      }
    },
    "group_duration": {
      "type": "trend",
      "contains": "time",
      "values": {
        "avg": 453.56348349999996,
        "min": 439.769875,
        "med": 455.13435400000003,
        "max": 461.502834,
        "p(90)": 461.4968337,
        "p(95)": 461.49983385
      }
    },
    "http_req_connecting": {
      "type": "trend",
      "contains": "time",
      "values": {
        "p(95)": 106.3496,
        "avg": 106.0907,
        "min": 105.896,
        "med": 105.9825,
        "max": 106.364,
        "p(90)": 106.3352
      }
    },
    "data_received": {
      "type": "counter",
      "contains": "data",
      "values": {
        "count": 60510,
        "rate": 130872.33080787872
      }
    },
    "http_req_waiting": {
      "type": "trend",
      "contains": "time",
      "values": {
        "avg": 105.28280000000002,
        "min": 103.029,
        "med": 104.94749999999999,
        "max": 108.248,
        "p(90)": 107.02759999999999,
        "p(95)": 107.6378
      }
    },
    "iterations": {
      "values": {
        "count": 10,
        "rate": 21.628215304557713
      },
      "type": "counter",
      "contains": "default"
    },
    "http_req_receiving": {
      "values": {
        "p(95)": 0.05074999999999999,
        "avg": 0.027200000000000002,
        "min": 0.012,
        "med": 0.024,
        "max": 0.062,
        "p(90)": 0.0395
      },
      "type": "trend",
      "contains": "time"
    },
    "api.my.service1-ListAccounts_count": {
      "type": "counter",
      "contains": "default",
      "values": {
        "count": 10,
        "rate": 21.628215304557713
      }
    },
    "api.my.service1-ListAccounts": {
      "type": "trend",
      "contains": "default",
      "values": {
        "avg": 105.34559999999999,
        "min": 103.076,
        "med": 104.99000000000001,
        "max": 108.354,
        "p(90)": 107.076,
        "p(95)": 107.715
      }
    },
    "http_reqs": {
      "type": "counter",
      "contains": "default",
      "values": {
        "count": 10,
        "rate": 21.628215304557713
      }
    },
    "http_req_duration{expected_response:true}": {
      "type": "trend",
      "contains": "time",
      "values": {
        "p(90)": 107.076,
        "p(95)": 107.715,
        "avg": 105.34559999999999,
        "min": 103.076,
        "med": 104.99000000000001,
        "max": 108.354
      }
    },
    "data_sent": {
      "type": "counter",
      "contains": "data",
      "values": {
        "count": 4620,
        "rate": 9992.235470705664
      }
    },
    "http_req_blocked": {
      "type": "trend",
      "contains": "time",
      "values": {
        "avg": 348.0201,
        "min": 333.44,
        "med": 349.192,
        "max": 358.268,
        "p(90)": 356.6237,
        "p(95)": 357.44584999999995
      }
    },
    "http_req_failed": {
      "type": "rate",
      "contains": "default",
      "values": {
        "fails": 10,
        "rate": 0,
        "passes": 0
      }
    },
    "http_req_duration": {
      "type": "trend",
      "contains": "time",
      "values": {
        "avg": 105.34559999999999,
        "min": 103.076,
        "med": 104.99000000000001,
        "max": 108.354,
        "p(90)": 107.076,
        "p(95)": 107.715
      }
    },
    "http_req_sending": {
      "type": "trend",
      "contains": "time",
      "values": {
        "max": 0.124,
        "p(90)": 0.08080000000000001,
        "p(95)": 0.10239999999999998,
        "avg": 0.03560000000000001,
        "min": 0.01,
        "med": 0.020999999999999998
      }
    },
    "checks": {
      "contains": "default",
      "values": {
        "passes": 14,
        "fails": 6,
        "rate": 0.7
      },
      "type": "rate"
    }
  }
}
```

The result might look a bit counterintuitive, but I see the same in the stdout output. Maybe I’m interpreting your issue incorrectly, so feel free to correct me.

In the test run I have 6 failed accounts:

```
INFO[0001] correct account source=console
INFO[0001] failed account source=console
INFO[0001] failed account source=console
INFO[0001] failed account source=console
INFO[0001] failed account source=console
INFO[0001] failed account source=console
INFO[0001] correct account source=console
INFO[0001] failed account source=console
INFO[0001] correct account source=console
INFO[0001] correct account source=console
```

Next, the summary shows the response content check failing 6 out of 10 times, which is consistent with the logs above.

```
█ MyService1.ListAccounts

  ✓ api.my.service1-ListAccounts response status code was 200
  ✗ api.my.service1-ListAccounts response content ok (includes testAccount)
   ↳ 40% — ✓ 4 / ✗ 6
```
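The per-check counts also add up to the overall `checks` metric in summary.json. Here is a small plain-JavaScript sketch of that arithmetic, with the counts copied from the summary above (`checkCounts` is just an illustrative name, and this is not k6 code):

```javascript
// Pass/fail counts per check, copied from the summary.json output.
const checkCounts = [
  { name: 'response status code was 200', passes: 10, fails: 0 },
  { name: 'response content ok (includes testAccount)', passes: 4, fails: 6 },
];

// The overall "checks" metric sums the counts across all checks.
const passes = checkCounts.reduce((sum, c) => sum + c.passes, 0);
const fails = checkCounts.reduce((sum, c) => sum + c.fails, 0);
const rate = passes / (passes + fails);

console.log({ passes, fails, rate }); // passes: 14, fails: 6, rate: 0.7
```

That matches the `checks` entry in the JSON: 14 passes, 6 fails, rate 0.7.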

And the rate metric shows:

```
✗ api.my.service1-ListAccounts_errors#...: 60.00% ✓ 6 ✗ 4
```

That is, since we add true when an error occurs, the metric counts 6 true values and 4 false values. Because this is an error rate, the ✓ count is actually the number of errors. The rate itself is calculated correctly: 60% of my requests failed.
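To make the counting concrete, here is a minimal plain-JavaScript sketch of that tallying. It is not k6's implementation: `tallyRate` is a hypothetical helper that only mimics how a Rate metric counts the values added to it, fed with the ten outcomes from the log above.

```javascript
// Hypothetical helper: mimics how a Rate metric tallies added values.
// Truthy values are counted as "passes", falsy values as "fails".
function tallyRate(values) {
  const passes = values.filter(Boolean).length;
  const fails = values.length - passes;
  return { passes, fails, rate: passes / values.length };
}

// Values added to the error rate, in log order:
// true = "failed account" (an error was recorded), false = "correct account".
const errorsAdded = [false, true, true, true, true, true, false, true, false, false];

const errorRate = tallyRate(errorsAdded);
console.log(errorRate); // passes: 6, fails: 4, rate: 0.6

// The threshold 'rate < 0.1' from the script, evaluated by hand.
const thresholdOk = errorRate.rate < 0.1;
console.log(thresholdOk); // false
```

So the 6 "passes" are simply the 6 times true was added, and the threshold is reported as not ok because 0.6 is not below 0.1.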

And this is the same thing we later see in the JSON summary:

```json
"api.my.service1-ListAccounts_errors#": {
  "type": "rate",
  "contains": "default",
  "values": {
    "rate": 0.6,
    "passes": 6,
    "fails": 4
  },
  "thresholds": {
    "rate < 0.1": {
      "ok": false
    }
  }
}
```

It is a bit counterintuitive that the JSON summary reports 6 passes and 4 fails. However, it matches what we see in the stdout output: with an error rate defined like this, a pass means an error was found, and it lands in the ✓ count for that metric.

That said, I always find this a bit counterintuitive, as my mind associates ✓ with pass and “good”, and ✗ with fail and “not good”.
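If the inverted reading bothers you, one option (an assumption on my side, not something I ran in the test above) would be to record a success rate instead: add the check result itself to the Rate and flip the threshold to `rate > 0.9`. Below is a plain-JavaScript sketch of how the counts would come out for the same ten iterations. Again, `tallyRate` is a hypothetical stand-in for the metric, not k6 code.

```javascript
// Hypothetical helper: mimics how a Rate metric tallies added values.
function tallyRate(values) {
  const passes = values.filter(Boolean).length;
  return { passes, fails: values.length - passes, rate: passes / values.length };
}

// check() results in log order: true = "correct account".
const checkResults = [true, false, false, false, false, false, true, false, true, true];

// Adding the check result itself turns the metric into a success rate,
// so a pass (✓) now means the account was correct.
const successRate = tallyRate(checkResults);
console.log(successRate); // passes: 4, fails: 6, rate: 0.4

// The matching threshold would be 'rate > 0.9' instead of 'rate < 0.1'.
const successThresholdOk = successRate.rate > 0.9;
console.log(successThresholdOk); // false
```

The information is the same, just mirrored: ✓ 4 / ✗ 6 with a 40% rate, which lines up with how the check itself is reported.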

Unless I have misinterpreted this, the results are consistent between stdout and the JSON file. Let me know if that's not the case and I’ll have another look.

Cheers!